Apple’s WWDC 2024 introduced various innovative features through Apple Intelligence, designed to make devices more powerful, personal, and intuitive. From Siri’s on-screen awareness to Genmojis, Apple Intelligence promises to transform how users interact with their devices. You can explore these exciting updates in Apple’s official video.

However, the standout feature is Personal Context Understanding. This feature delivers a highly personalized experience tailored to each user’s unique needs by tapping into user data like messages, calendar events, and location.

As Uncle Ben wisely said, "With great power comes great responsibility" and with AI comes data usage and privacy issues. In this blog, we will expand on Personal Context Understanding and discuss how Apple promises to ensure user data privacy within these new features.

Personal Context Understanding

At the heart of Apple Intelligence lies its ability to understand and utilize personal context. This feature sets it apart from other AI systems and makes it truly "personal intelligence."

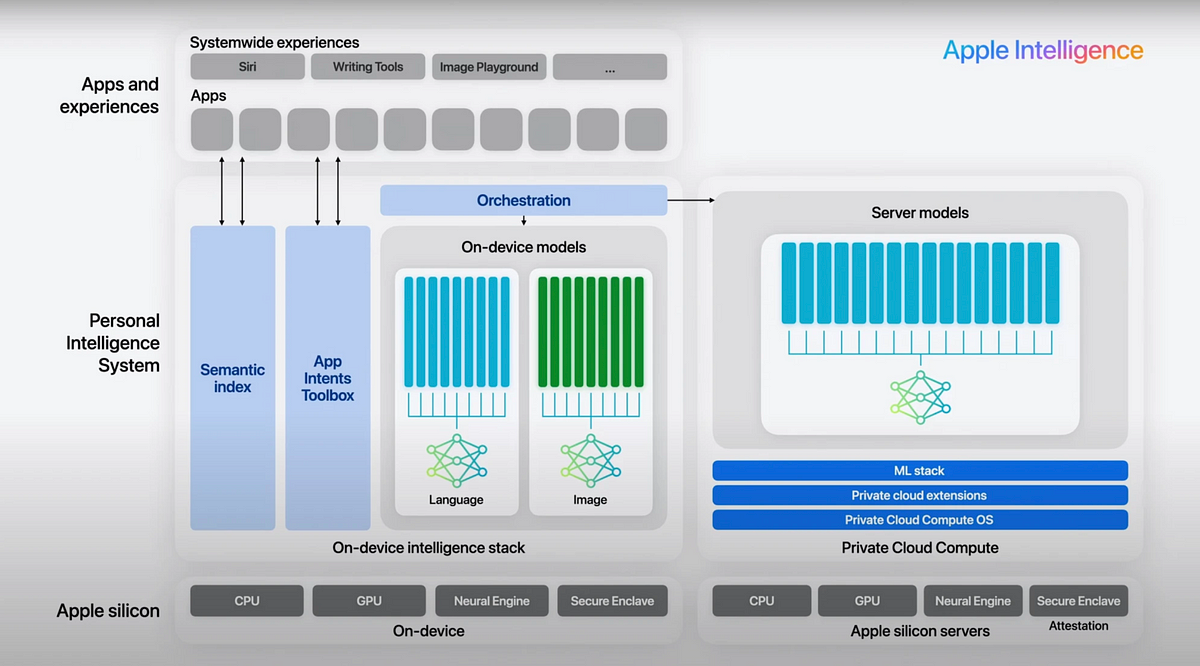

Apple Intelligence employs a sophisticated on-device semantic index that organizes and surfaces information from across your apps. This index allows the system to quickly retrieve relevant personal data when needed, without storing or analyzing this data in the cloud.

The power of this semantic indexing was demonstrated during the WWDC 2024 presentation by Craig Federighi. He showcased how Apple Intelligence could process multiple pieces of personal data to provide context-aware insights:

When Craig's meeting was rescheduled for later in the day, he wondered if he would still make it to his daughter's play performance on time. Apple Intelligence seamlessly processed various pieces of information:

- It identified who Craig's daughter was

- Retrieved the play details she had sent a few days earlier

- Considered the time and location of the rescheduled meeting

- Predicted traffic conditions between his office and the theater

This example illustrates how Personal Context Understanding uses data like messages, calendar events, locations, and traffic predictions to offer personalized and timely assistance, making it a truly intelligent system.

Privacy at the Core of Apple Intelligence

While these new features are undoubtedly exciting, privacy is central to their design. As Craig Federighi emphasized, "You should not have to hand over all the details of your life to be warehoused and analyzed in someone’s AI cloud." Apple Intelligence processes personal data on-device, meaning your information stays on your device, not in the cloud.

Even when more computational power is required for certain tasks—like when larger models are needed—Private Cloud Compute steps in. This allows Apple to use server-based models while ensuring your data is never stored or accessible to anyone. Apple uses cryptographic techniques to ensure that only servers running authorized software can process your data, adding an extra layer of protection.

What Is Private Cloud Compute?

Apple’s Private Cloud Compute (PCC) addresses the challenge of processing tasks that require more computational power than a user's device can handle. For tasks that exceed the capabilities of an iPhone, iPad, or Mac, the device securely sends the request to PCC nodes, which are specially designed-cloud servers.

Source: Apple

Secure Hardware: Apple Silicon and the Secure Enclave

Apple's Private Cloud Compute system is not just about software. It’s built on Apple silicon, leveraging the same hardware security features present in devices like the iPhone and Mac. Each PCC node contains a Secure Enclave Processor (SEP), a specialized hardware component that handles cryptographic operations, including the management of encryption keys.

The SEP ensures that sensitive information, such as the private keys required for decrypting data, is stored securely and never exposed to external systems. It also enforces Secure Boot, ensuring that only Apple-approved, signed software can run on the PCC nodes.

Encryption: Protecting Data In Transit

Before your data is transmitted to a PCC node, it’s secured using public key cryptography. This process ensures that your data is encrypted from the moment it leaves your device. Specifically, your device encrypts the request using the public keys of the PCC nodes. Only the corresponding private keys, securely stored in the PCC servers, can decrypt the data.

End-to-end encryption ensures the data remains secure during its entire journey across networks. Even intermediate services such as load balancers or routers cannot decrypt the data, as they do not possess the necessary keys. This eliminates the risk of interception, ensuring that only the PCC node can process your request.

Stateless Computation and Data Erasure

In Private Cloud Compute, your data is processed only while your request is being fulfilled. Once the response is sent back to your device, all personal data is erased from memory. The data is only temporarily used by the PCC node, existing in memory strictly for the duration of the request. Notably, no logs are kept during or after processing, ensuring no trace of your request is left on the server. This ensures that no one—including Apple staff—can access your data once the task is complete.

Verifiable Transparency and Attestation

To ensure trust, Apple has taken an extraordinary step in making PCC software images publicly available. Independent security researchers can inspect the software running on these nodes to verify that Apple’s privacy claims hold up. This transparency allows for continuous verification, ensuring that PCC nodes are behaving as expected.

Furthermore, each PCC node must provide cryptographic attestation to prove it’s running authorized software. Your device will only send encrypted requests to nodes that can verify they are running the correct software version. This ensures that compromised or unauthorized servers cannot access your data, as they wouldn’t be able to prove they are legitimate.

By combining code signing, attestation, and public software transparency, Apple establishes an ecosystem where users and experts can verify their privacy is respected.

Handling Privacy in Third-Party Integrations: ChatGPT Example

With the integration of ChatGPT into Apple Intelligence, Apple enables users to tap into the power of this language model for creative tasks, content generation, and more. However, since ChatGPT is operated by a third party, privacy concerns have surfaced, and even Elon Musk sparked controversy, claiming, “Apple has no clue what’s actually going on once they hand your data over to OpenAI. They’re selling you down the river.” Musk went on to criticize Apple, stating that using terms like “protect your privacy” while partnering with OpenAI was misleading.

In response to these concerns, Apple has implemented privacy measures aimed at protecting user data during such third-party integrations:

- Explicit User Consent: Before any data is sent to ChatGPT, users must explicitly consent to share it. This ensures that users are fully aware when their data is leaving Apple’s ecosystem and being processed by a third party.

- Data Minimization: Apple shares only the necessary information with ChatGPT. For example, if you ask for content generation, only the specific prompt related to that task is shared, limiting the amount of data exposed to third-party models.

- Clear Communication: Apple ensures users are informed when data is shared with a third-party service like ChatGPT. This transparency allows users to make informed decisions about their privacy.

- No Guarantee on External Privacy: Once the data reaches ChatGPT’s servers, it’s subject to OpenAI’s privacy policies, which Apple cannot control. Users should be aware that third-party services may handle and store data differently than Apple.

By applying these principles, Apple balances the need for powerful third-party integrations with a commitment to keeping user privacy in focus.

Conclusion: Promising Features, but Real-World Performance Awaits

Apple’s WWDC 2024 unveiled many new features, from Siri’s contextual conversations and advanced writing tools to generative image creation and third-party integrations like ChatGPT. These advancements have the potential to transform how we interact with our devices, making them smarter, more personal, and deeply integrated into our daily workflows.

However, these features are still promises for the future. While the technology is exciting, its real-world performance remains to be seen. Apple’s approach to privacy, especially through innovations like Private Cloud Compute, sets a new industry benchmark. Apple is demonstrating that powerful AI can coexist with rigorous privacy standards by processing data securely on-device and ensuring that server-based models never retain or access personal information.

As these updates roll out, time will tell if they meet performance expectations and succeed in shaping a future where technology respects user privacy without compromise.